... when birds manipulate humans, and ants can estimate Pi.

Writer Kevin Kelly has a very interesting post on intelligence in nature.

I would recommend going there for the collection of amazing facts and the quality of the writing, but I have some reservations about the general vision of intelligence I think he's putting forward.

23/04/2009

16/04/2009

Finir sa thèse ! (Finishing one's thesis)

Beautiful lyrics, brilliant and fun (mmm... chords borrowed from Blu Cantrell, it seems)

Le Minotaure

The author/singer/guitar player, Simon Berjeaut, is just ridiculously talented.

There is definitely some Brassens here, and a touch of Renaud, but without the crude voice and lyrics.

Taken together with some recent discoveries heard on this radio (no, really), I think there's hope again for French music!

13/04/2009

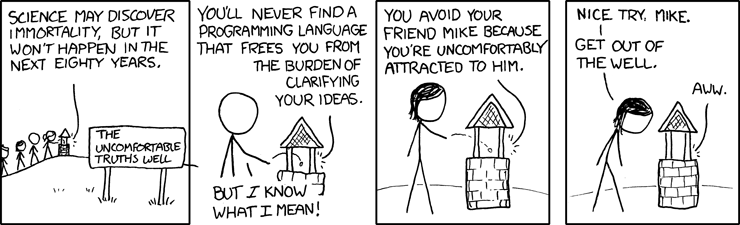

xkcd, graciously smart..

..and "a treasured and carefully-guarded point in the space of four-character strings" according to its creator Randall Munroe.

12/04/2009

Lexical semantics (3/4)

How does it work?

We should be a little more careful in the way we phrase this question.

More specifically, what we want to describe in this post is: "How does the meaning of a word become available upon its (say, visual) presentation?".

There are a number of computational ways to tackle this question, and an important one is the PDP connectionist approach championed by researchers like Jay McClelland, Mark Seidenberg, David Plaut, David Rumelhart and Karalyn Patterson. All PDP models share the assumption that words are mappings between different codes: visual codes, sound codes and meaning codes. In neural network terms, this means that words are embodied in connections between different subsystems of units. To my knowledge, the most recent PDP model was proposed by Plaut and Booth (2000), and it uses a fully recurrent network for the meaning subsystem, that is, a subnetwork in which all units are connected to each other. Recurrent networks are dynamical systems that exhibit attractor dynamics: the meaning of a word corresponds to an attractor of the system, i.e. a state towards which the system will naturally converge if it starts close enough to it. The central disk in the picture is an attractor in the state space of a system evolving in discrete time steps: each fan corresponds to many starting states being mapped onto one, but only one point (the center of the disk) maps onto itself. There is no escaping from it, and it attracts all the other states.
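
To make the attractor idea concrete, here is a minimal Python sketch. It is not Plaut and Booth's model (theirs is a trained recurrent network with graded units); it swaps in a classic Hopfield network, about the simplest fully recurrent attractor network there is, and the two 16-unit "meaning" patterns are hypothetical. A state that starts close enough to a stored meaning settles onto it and then stays put.

```python
import random

def train_hopfield(patterns):
    """Hebbian (outer-product) weights storing +1/-1 patterns; no self-connections."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w

def settle(w, state, max_sweeps=50, seed=0):
    """Update units one at a time until a full sweep changes nothing,
    i.e. until the network sits on a fixed point (an attractor)."""
    rng = random.Random(seed)
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            h = sum(w[i][j] * s[j] for j in range(n))
            new = 1 if h > 0 else -1 if h < 0 else s[i]  # keep current state on a tie
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s

# Two hypothetical, mutually orthogonal "meaning" patterns over 16 semantic units.
meaning_a = [1] * 8 + [-1] * 8
meaning_b = [1, -1] * 8
w = train_hopfield([meaning_a, meaning_b])

# Start "close enough" to meaning_a: flip its first three units.
probe = [-x for x in meaning_a[:3]] + meaning_a[3:]
print(settle(w, probe) == meaning_a)  # True: the probe falls into meaning_a's basin
```

The stored patterns are themselves fixed points, so once the network reaches one, further updates leave it unchanged, which is the "no escaping" property described above.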

This contrasts with another connectionist variant supported by researchers like Jonathan Grainger, Max Coltheart and Colin Davis, to name a few. These also use neural network models, but words are now envisaged as units in their own right, not as connections between units. Access to a word's meaning is modelled as the activation of a dedicated meaning unit, exactly as access to a word's orthography activates a dedicated orthography unit. Although such systems have been described, for instance in Carreiras et al. (1997) or Coltheart et al. (2001), to my knowledge an implementation has yet to be provided. The structure of such "localist" semantic networks has also been studied more recently, for instance by Steyvers and Tenenbaum (2005) (left picture), but this was done by abstracting away any network dynamics, and thus any question about lexical access.
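
For contrast, a localist lexicon can be sketched in a few lines. This is a toy of my own, loosely in the spirit of interactive-activation models, and not the architecture of Carreiras et al. (1997) or Coltheart et al. (2001): each word has one orthographic unit and one meaning unit, letters excite orthographic units, rival words inhibit one another, and each orthographic unit feeds only its own meaning unit. The lexicon and the `rate`/`inhibition` parameters are hypothetical.

```python
# Hypothetical toy lexicon; in a localist model every word gets its own unit.
LEXICON = ["cat", "cap", "dog"]

def letter_overlap(stimulus, word):
    """Proportion of letter positions the stimulus shares with the word."""
    return sum(a == b for a, b in zip(stimulus, word)) / len(word)

def access_meaning(stimulus, cycles=30, rate=0.2, inhibition=0.5):
    """Return the word whose dedicated meaning unit ends up most active, plus all meaning activations."""
    ortho = {w: 0.0 for w in LEXICON}    # one orthographic unit per word
    meaning = {w: 0.0 for w in LEXICON}  # one dedicated meaning unit per word
    for _ in range(cycles):
        total = sum(ortho.values())
        updated = {}
        for w in LEXICON:
            # bottom-up support from the letters minus lateral inhibition from rival words
            net = letter_overlap(stimulus, w) - inhibition * (total - ortho[w])
            updated[w] = min(1.0, max(0.0, ortho[w] + rate * (net - ortho[w])))
        ortho = updated
        # each meaning unit is driven only by its own word's orthographic unit
        for w in LEXICON:
            meaning[w] += rate * (ortho[w] - meaning[w])
    return max(meaning, key=meaning.get), meaning

winner, activations = access_meaning("cat")
print(winner)  # cat
```

Note that the orthographic neighbour `cap` still gets some meaning activation here, but only through its own unit: there is no overlap between meaning representations, which is exactly what distinguishes this view from the distributed ones.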

Yet another connectionist approach, more developmental and certainly more easily related to the brain, is the self-organising approach. Again we are talking about mappings between orthographic, semantic and phonological codes, but here the codes of each type are supported by particular subsystems called self-organising maps (see right image), which communicate with one another through Hebbian connections. Examples of this approach include Miikkulainen (1993), Li et al. (2004) and Mayor and Plunkett (2008). These models take a little from both worlds: access to meaning corresponds to the appearance of a pattern of activation in the semantic map, a pattern that is distributed across all units, as in the PDP approach, but centered on a so-called "best-matching" unit, as in the localist approach. These models usually put the emphasis on developmental and neural plausibility, and they are not yet ripe (or not yet upgraded) for modelling adult lexical decision or semantic categorisation tasks.
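
The self-organising alternative can also be sketched, again under loud assumptions: two small one-dimensional maps (an "orthographic" and a "semantic" one) trained with the standard Kohonen update, a Gaussian activation profile so that every unit responds but the best-matching unit responds most, and plain Hebbian co-activation links between the maps. The toy 2-D codes, map sizes and learning schedules are all hypothetical and do not reproduce Miikkulainen (1993), Li et al. (2004) or Mayor and Plunkett (2008).

```python
import math
import random

def make_som(n_units, dim, rng):
    """A 1-D self-organising map: one weight vector per unit, initialised in [0, 1]."""
    return [[rng.random() for _ in range(dim)] for _ in range(n_units)]

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def bmu(som, x):
    """Index of the best-matching unit, i.e. the unit whose weights are closest to x."""
    return min(range(len(som)), key=lambda k: sq_dist(som[k], x))

def train_som(som, data, epochs=200, lr0=0.5, radius0=2.0):
    """Kohonen training: pull the BMU and its map neighbours towards each input."""
    for t in range(epochs):
        frac = t / epochs
        lr = lr0 * (1 - frac)                    # learning rate decays towards 0
        radius = max(0.5, radius0 * (1 - frac))  # neighbourhood shrinks on the map
        for x in data:
            b = bmu(som, x)
            for k, w in enumerate(som):
                h = math.exp(-((k - b) ** 2) / (2 * radius ** 2))  # Gaussian neighbourhood
                for d in range(len(w)):
                    w[d] += lr * h * (x[d] - w[d])

def activation(som, x, width=0.2):
    """Graded response of every unit to x, peaking at exactly 1.0 on the BMU."""
    dists = [sq_dist(w, x) for w in som]
    dmin = min(dists)
    return [math.exp(-(d - dmin) / (2 * width ** 2)) for d in dists]

def hebbian_links(ortho_som, sem_som, pairs, rate=0.1):
    """Strengthen each ortho->semantic link by the product of the two units' activations."""
    links = [[0.0] * len(sem_som) for _ in ortho_som]
    for o, s in pairs:
        a_o, a_s = activation(ortho_som, o), activation(sem_som, s)
        for i, ai in enumerate(a_o):
            for j, aj in enumerate(a_s):
                links[i][j] += rate * ai * aj
    return links

def semantic_response(ortho_som, links, o):
    """Activation reaching the semantic map from an orthographic input via the links."""
    a_o = activation(ortho_som, o)
    return [sum(a_o[i] * links[i][j] for i in range(len(a_o))) for j in range(len(links[0]))]

# Hypothetical toy codes: (orthographic vector, semantic vector) for three words.
words = {"cat": ([0.1, 0.1], [0.9, 0.8]),
         "cap": ([0.2, 0.1], [0.1, 0.2]),
         "dog": ([0.9, 0.9], [0.8, 0.9])}

rng = random.Random(0)
ortho, sem = make_som(8, 2, rng), make_som(8, 2, rng)
train_som(ortho, [o for o, _ in words.values()])
train_som(sem, [s for _, s in words.values()])
links = hebbian_links(ortho, sem, words.values())

resp = semantic_response(ortho, links, words["dog"][0])
print(resp.index(max(resp)), bmu(sem, words["dog"][1]))
```

With well-separated codes, the semantic unit driven most strongly through the Hebbian links will typically be the semantic map's best-matching unit for that word, but nothing in this toy guarantees it: orthographic neighbours such as `cat` and `cap` can leak into each other's semantic responses.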

Finally we have semantic space models. These are not connectionist models: they don't need any neural network to be described. Moreover, these models say nothing about the interaction between orthography, semantics and phonology, and hence they should not be seen as lexical access models, but rather as models of how humans build and represent lexical semantic information. The common hypothesis made by the proponents of this approach is that semantic codes are large vectors (they live in a high-dimensional semantic space), computed from more or less complicated co-occurrence statistics in the language environment. Examples include Landauer and Dumais's (1997) LSA model and, more recently, Jones and Mewhort's (2007) BEAGLE model (left picture). The focus of interest here lies in the internal organisation of the model: the topology of the semantic space, that is, how related word meanings come to be represented in nearby regions of the semantic space (the picture shows a 2D projection of a semantic space in which related words are clustered together). Because they make no commitment as to how lexical access occurs, semantic spaces could in principle be used with any of the previously mentioned models. However, being distributed, semantic space vectors are more easily interfaced with the PDP or the self-organising approaches.
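
The core idea shared by semantic space models (word vectors built from co-occurrence statistics, compared by the angle between them) fits in a short sketch. It is deliberately simpler than either cited model: LSA works on a word-by-document matrix reduced by singular value decomposition, and BEAGLE combines random "environmental" vectors with circular convolution to also encode word order. Here I just count neighbours within a hypothetical two-word window over a made-up corpus, which is closer to a raw co-occurrence space.

```python
import math
from collections import Counter, defaultdict

def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions of it."""
    vecs = defaultdict(Counter)
    for sent in sentences:
        toks = sent.lower().split()
        for i, w in enumerate(toks):
            lo, hi = max(0, i - window), min(len(toks), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vecs[w][toks[j]] += 1
    return vecs

def cosine(u, v):
    """Cosine of the angle between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    nu = math.sqrt(sum(x * x for x in u.values()))
    nv = math.sqrt(sum(x * x for x in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0

# Hypothetical toy "language environment".
corpus = [
    "the cat chased the mouse",
    "the dog chased the cat",
    "the dog ate the bone",
    "the cat ate the fish",
    "stocks fell on the market",
    "the market rallied as stocks rose",
]
vecs = cooccurrence_vectors(corpus)
print(round(cosine(vecs["cat"], vecs["dog"]), 2))  # 0.99
print(cosine(vecs["cat"], vecs["stocks"]))         # 0.0
```

`cat` and `dog` end up very close because they occur in similar frames, while `cat` and `stocks` share no context at all. Real models also down-weight very frequent words like "the", which dominate these raw counts.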

In summary, we have a variety of ways to think about lexical semantic information, depending on exactly which findings we wish to account for. Do we seek to explain developmental or adult data? To what extent does neural plausibility matter? Should we let facts about the brain interfere with our modelling of cognition? Not necessarily, if one adheres to "multiple realizability"! What we can say, however, is that the connectionist approach, in its many versatile forms, still constitutes the privileged way to describe lexical semantics. The importance of using high-dimensional spaces is also emerging. Finally, the literature is still highly polarized along the developmental vs. mature dimension, with little exchange of information between the models of each realm.

In another post, I'll try to explain how a less schizophrenic modelling approach could exist, mediated by self-organising models.

09/04/2009

Gigantic!

..Well, that is, apart from the "insignificant bit of carbon" part at the end. I think this is a misconception. It reminds me of Stephen Hawking's "chemical scum" statement. I agree with David Deutsch that this is the product of a limited vision of the human condition!

03/04/2009
