26/12/2009

23/12/2009

Great inventions #1 -the fireplace

And I don't mean fire, mind you, but literally the fireplace. And please spare me the gospel about how it was the discovery of fire that really kick-started technology. Fire might have started technology, but I contend fireplaces started civilization!

21/12/2009

Applied cognitive science

I'm not sure one can find anything more pressing than helping to educate children all around the world, once, of course, their basic health is guaranteed. We shouldn't leave this to religion, as is too often the case, and we should do it with the full knowledge our modern times have to offer. That means exploiting cognitive science.

20/12/2009

The big picture!

The universe could have been quite boring..

fortunately it seems its weirdness will always exceed our wildest expectations.

(via Backreaction)

13/12/2009

Why I shouldn't like Marseille

- The city's loud and dirty (well, what do you expect, it's a port)

- You can spend ages trying to find a place with both an internet connection and a plug

- It is full of excited youngsters dressed in cheap sportswear in the colours of OM (the local football club)

and yet..

09/12/2009

The inner language

Cognitive science is a pretty large field, but three questions have been driving a lot of the research effort, almost from the start.

What is language?

First notice that it is a two-sided coin: there's external language and internal language. Everybody knows what external language is: it is that particular set of sounds and signs whose shapes and sequences convey some conventional meaning. This convention is formalized into rules, exceptions and templates, and exemplified in googols of more or less beautiful instances in everyday life.

05/12/2009

What do barnacles, toxoplasmosis and rabies have in common?

These are all hijacking machines.

So instead of digging its own hole in the sand and laying its eggs there, the female Sacculina barnacle finds it much more fun to attach itself to a green crab's back, slowly move over the shell's surface until it finds a joint, and then send its cells to invade the crab's body.

04/12/2009

Religion hurts more than it heals -let's get rid of it.

It hurts. Badly. Kills millions, scares more. It is especially hard on women all over the world, and shamelessly contaminates children from a young age. It has infected more than 3/4 of the world's population. I'm not talking about an organic disease, but about religion. This merciless plague is the love of god. Are you religious? Please read more.

26/11/2009

Some standard religious misconceptions (and how to expose them)

- You can't disprove god

Most things cannot be disproved; this doesn't make them real.

The likelihood of the existence of tooth fairies, or of any kind of supernatural entity, is equally low and can be safely neglected, given that there isn't a hint of evidence for them.

22/11/2009

Beauty and design

Simple idea, great execution.

Also, breathtaking pictures from National Geographic, very nice to look at while listening for instance to Portishead.

(Via Webdesigner depot, where one can find all kinds of interesting things. Here a wonderful pencil sculpture by Jennifer Maestre)

Inspired by all this beauty, my wife and I went on to put together the best late brunch/tea time EVER!

21/11/2009

*painful post alert* - the Falun Gong genocide

You're peacefully enjoying your first coffee of the day close to place de la Bastille in Paris, engaging in idle chit-chat with the waitress and anticipating a very nice and surprisingly warm weekend. This is when the trumpets and drums kick in.

The Scandal of Probabilities?

According to the late Bertrand Russell, there is a scandal at the root of probability theory: the scandal of induction.

Simply stated, the problem is that what has been verified a finite number of times has no reason to hold beyond those occasions!

14/11/2009

Extirpating religion

Pierrot

Here's another beautiful French song. It's been out for a while, but for some reason I only stumbled upon the music video recently. It's by Loïc Lantoine, who I gather has morphed into a collective of four showmen (?!).

The song is about friendship and how it can bring solace. In the song, Pierrot never fails to come in times of despair to offer the singer some kind of ineffable support, each time embodied in the delicate and pure guitar arpeggios that end the choruses. The video actually makes this friend much more ambivalent and imaginary, playing with an old French lullaby.

27/10/2009

26/10/2009

16/08/2009

SCIENCE vs RELIGION: how to answer the "many scientists are believers in god" argument?

The argument implies that science and religion are compatible. For a short answer, I would suggest:

"Many physicians also smoke" (yes, even in your country)

Does it have to mean that smoking is good for health?

For a longer refutation, see Sam Harris's recent post. See also Atkins's site for nice quotes!

23/04/2009

When plants are watching...

... when birds manipulate humans, and ants can estimate Pi.

Writer Kevin Kelly has a very interesting post on intelligence in nature.

I would recommend going there for the collection of amazing facts and the quality of the writing, but I have some reservations about the general vision of intelligence I think he's putting forward.

16/04/2009

Finir sa thèse ! (Finishing one's thesis)

Beautiful lyrics, brilliant and fun (mmm..chords borrowed from Blu Cantrell it seems)

Le Minotaure

The author/singer/guitar player, Simon Berjeaut, is just ridiculously talented.

There is definitely some Brassens here, and a touch of Renaud, but without the crude voice and lyrics.

Taken together with some recent discoveries heard on this radio, no, really, I think there's hope again for French music!

13/04/2009

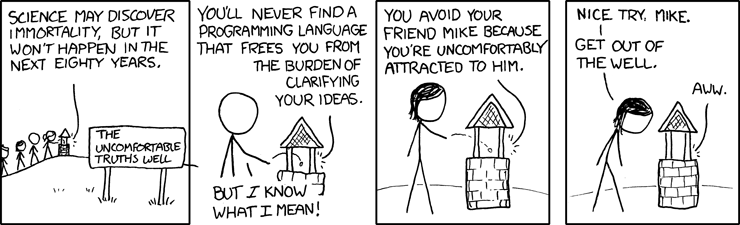

xkcd, graciously smart..

..and "a treasured and carefully-guarded point in the space of four-character strings" according to its creator Randall Munroe.

12/04/2009

Lexical semantics (3/4)

How does it work?

We should be a little more careful in the way we phrase this question.

More specifically, what we want to describe in this post is: "How does the meaning of a word become available upon its (say, visual) presentation?".

There are a number of computational ways to tackle this question, and an important one is the PDP connectionist approach championed by researchers like Jay McClelland, Mark Seidenberg, David Plaut, David Rumelhart and Karalyn Patterson. All PDP models share the assumption that words are mappings between different codes: visual codes, sound codes and meaning codes. In neural network terms, this means that words are embodied in connections between different subsystems of units. To my knowledge, the most recent PDP model was proposed by Plaut and Booth (2000), and it uses a fully recurrent network for the meaning subsystem, that is to say, a subnetwork in which all units are connected to each other. Recurrent networks are dynamical systems which exhibit attractor dynamics: the meaning of a word corresponds to an attractor of the system, i.e. a state towards which the system will naturally converge if it starts close enough. The central disk in the picture is an attractor in the state space of a system evolving in discrete time steps: each fan corresponds to many starting states being mapped to one, but only one point (the center of the disk) maps to itself. There's no escaping from it, and it attracts all other states.
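
To make "attractor dynamics" more concrete, here is a minimal Python sketch of a Hopfield network, the textbook example of a fully recurrent network whose stored patterns act as attractors. It is not the Plaut and Booth (2000) model: the ±1 toy "meaning" patterns, the Hebbian outer-product weights and the one-unit-at-a-time update rule are assumptions chosen for illustration. A pattern with one unit flipped plays the role of a starting state "close enough" to an attractor.

```python
from typing import List

Pattern = List[int]  # hypothetical toy "meaning" code: one +1/-1 state per unit


def train(patterns: List[Pattern]) -> List[List[float]]:
    """Hebbian outer-product weights: every unit is connected to every other unit."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w


def settle(w: List[List[float]], state: Pattern, max_sweeps: int = 20) -> Pattern:
    """Update units one at a time until a full sweep changes nothing (a fixed point)."""
    s = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            net = sum(w_ij * s_j for w_ij, s_j in zip(w[i], s))
            new = 1 if net >= 0 else -1
            if new != s[i]:
                s[i], changed = new, True
        if not changed:
            break
    return s


# Two stored "meanings" (orthogonal patterns, so they do not interfere)
meaning_a = [1, 1, 1, 1, -1, -1, -1, -1]
meaning_b = [1, -1, 1, -1, 1, -1, 1, -1]
w = train([meaning_a, meaning_b])

noisy = [-1] + meaning_a[1:]          # start near meaning_a, with one unit flipped
print(settle(w, noisy) == meaning_a)  # True: the state falls into the attractor
```

Because the two stored patterns here are orthogonal, each one is a fixed point and the corrupted state falls back into `meaning_a`; with many overlapping patterns, a network like this can also settle into spurious mixture states.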

This contrasts with another connectionist variant supported by researchers like Jonathan Grainger, Max Coltheart and Colin Davis, to cite a few. These also use neural network models, but words are now envisaged as units in their own right, not as connections between units. Access to a word's meaning is modelled as the activation of a dedicated meaning unit, exactly as access to a word's orthography activates a dedicated orthography unit. Although such systems have been described, for instance in Carreiras et al (1997) or Coltheart et al (2001), to my knowledge an implementation has yet to be provided. The structure of such "localist" semantic networks has also been studied more recently, for instance in Steyvers and Tenenbaum (2005) (left picture), but this was done by abstracting away any network dynamics, and thus any question about lexical access.
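
As a rough illustration of the localist idea, here is a toy Python sketch in the spirit of interactive-activation models: one unit per word, lateral inhibition between word units, and a dedicated meaning unit per word. The three-word lexicon, the letter-position matching used as bottom-up input, the parameter values and the winner-take-all read-out are all hypothetical; they are not the equations of Carreiras et al (1997) or Coltheart et al (2001).

```python
from typing import Dict

# Hypothetical toy lexicon: one word unit per entry, each wired to its own meaning unit
MEANING_UNIT: Dict[str, str] = {"cat": "FELINE", "cap": "HEADWEAR", "dog": "CANINE"}


def bottom_up(stimulus: str, word: str) -> float:
    """Proportion of letters matching in position (toy letter-to-word connections)."""
    if len(stimulus) != len(word):
        return 0.0
    return sum(a == b for a, b in zip(stimulus, word)) / len(word)


def settle_word_units(stimulus: str, inhibition: float = 0.3,
                      rate: float = 0.5, steps: int = 50) -> Dict[str, float]:
    """Leaky activation with lateral inhibition between word units, clipped to [0, 1]."""
    inputs = {w: bottom_up(stimulus, w) for w in MEANING_UNIT}
    act = {w: 0.0 for w in MEANING_UNIT}
    for _ in range(steps):
        total = sum(act.values())
        # Each unit moves toward (its input minus inhibition from all other word units)
        act = {
            w: min(1.0, max(0.0, a + rate * (inputs[w] - inhibition * (total - a) - a)))
            for w, a in act.items()
        }
    return act


def access_meaning(stimulus: str) -> Dict[str, float]:
    """The winning word unit switches on its dedicated meaning unit; the others stay off."""
    act = settle_word_units(stimulus)
    winner = max(act, key=act.get)
    return {m: (1.0 if w == winner else 0.0) for w, m in MEANING_UNIT.items()}


print(access_meaning("cat"))  # {'FELINE': 1.0, 'HEADWEAR': 0.0, 'CANINE': 0.0}
```

The similar word "cap" receives partial input and is then held down by the winner; in this scheme the meaning is read off a single dedicated unit rather than from a pattern spread over many units.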

Yet another connectionist approach, more developmental and certainly more easily related to the brain, is the self-organising approach. Again we are talking about mappings between orthographic, semantic and phonological codes, but here codes of each type are supported by particular subsystems called self-organising maps (see right image), and these communicate with one another through Hebbian connections. Examples of this approach include Miikkulainen (1993), Li et al (2004) and Mayor and Plunkett (2008). These models take a little from both worlds. Indeed, access to meaning corresponds to the appearance of a pattern of activation in the semantic map, a pattern which is distributed across all units as in the PDP approach, but which is centered on a so-called "best-matching" unit as in the localist approach. These models usually put the emphasis on developmental and neural plausibility, and they are not yet ripe (or not yet upgraded) for modelling adult lexical decision or semantic categorisation tasks.
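
Here is a minimal Python sketch of a single self-organising map, showing the "best-matching" unit and the distributed activation pattern centred on it. It leaves out the Hebbian links between maps, and the 2-D input vectors, the map size and the learning-rate and neighbourhood schedules are hypothetical choices, not those of the models cited above.

```python
import math
from typing import List, Sequence

Vector = List[float]


def bmu(weights: Sequence[Vector], x: Vector) -> int:
    """Best-matching unit: index of the unit whose weight vector is closest to x."""
    return min(range(len(weights)), key=lambda k: math.dist(weights[k], x))


def train_som(data: Sequence[Vector], n_units: int = 5, epochs: int = 50,
              lr0: float = 0.5, radius0: float = 2.0) -> List[Vector]:
    """Kohonen-style training of a 1-D map with a shrinking Gaussian neighbourhood."""
    dim = len(data[0])
    # Deterministic initialisation on a short line segment (an assumption, for reproducibility)
    weights = [[0.4 + 0.2 * k / (n_units - 1)] * dim for k in range(n_units)]
    for t in range(epochs):
        lr = lr0 * (1 - t / epochs)
        radius = max(radius0 * (1 - t / epochs), 0.5)
        for x in data:
            win = bmu(weights, x)
            for k in range(n_units):
                # Neighbourhood strength depends on distance on the map grid, not in input space
                h = math.exp(-((k - win) ** 2) / (2 * radius ** 2))
                weights[k] = [w + lr * h * (xi - w) for w, xi in zip(weights[k], x)]
    return weights


def activation(weights: Sequence[Vector], x: Vector) -> List[float]:
    """Distributed activity over every unit, peaking at the best-matching unit."""
    return [math.exp(-math.dist(w, x)) for w in weights]


# Hypothetical 2-D "semantic features" for words from two categories
data = [[0.0, 0.0], [0.9, 0.9], [0.1, 0.1], [1.0, 1.0]]
weights = train_som(data)
pattern = activation(weights, [0.05, 0.05])
print(bmu(weights, [0.05, 0.05]), bmu(weights, [0.95, 0.95]))
```

Because neighbouring units on the map are updated together, nearby units end up coding similar inputs; this topographic organisation is what makes such maps attractive from a developmental and neural point of view.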

Finally we have semantic space models. These are not connectionist models: they don't need any neural network to be described. Moreover, these models say nothing about the interaction between orthography, semantics and phonology, and hence they should not be seen as lexical access models, but rather as models of how humans build and represent lexical semantic information. The common hypothesis made by proponents of this approach is that semantic codes are large vectors (they live in a high-dimensional semantic space), computed from more or less complicated co-occurrence statistics in the language environment. Examples include Landauer and Dumais's (1997) LSA model and, more recently, Jones and Mewhort's (2007) BEAGLE model (left picture). The focus of interest here lies in the internal organisation of the model: the topology of the semantic space, that is, how related word meanings come to be represented in similar patches of the semantic space (the picture shows a 2D projection of a semantic space in which related words are clustered together). Because they make no commitment as to how lexical access occurs, semantic spaces could in theory be used with any of the previously mentioned models. However, being distributed, semantic space vectors are more easily interfaced with the PDP or self-organising approaches.
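
The core hypothesis can be illustrated with a toy Python sketch: each word gets a vector counting the words that appear within a small window around it, and relatedness is measured as the cosine between vectors. This is an assumed simplification, not LSA or BEAGLE themselves: LSA works from a word-by-document matrix reduced with a singular value decomposition, and BEAGLE builds its vectors from random environmental vectors combined by summation and circular convolution. The six-sentence corpus is hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List


def cooccurrence_vectors(sentences: List[List[str]], window: int = 2) -> Dict[str, Counter]:
    """Count, for each word, the words occurring within `window` positions of it."""
    vectors: Dict[str, Counter] = defaultdict(Counter)
    for tokens in sentences:
        for i, word in enumerate(tokens):
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(u: Counter, v: Counter) -> float:
    """Cosine of the angle between two sparse count vectors (1.0 = same direction)."""
    dot = sum(count * v[word] for word, count in u.items() if word in v)
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


# Hypothetical miniature "language environment"
corpus = [s.split() for s in [
    "the cat chased the mouse",
    "the dog chased the cat",
    "the dog ate the bone",
    "the cat ate the fish",
    "she read the book",
    "he read the paper",
]]
vectors = cooccurrence_vectors(corpus)
print(round(cosine(vectors["cat"], vectors["dog"]), 2))   # 0.99
print(round(cosine(vectors["cat"], vectors["book"]), 2))  # 0.65
```

Words used in similar contexts ("cat", "dog") end up closer than unrelated ones ("cat", "book"). Raw counts are dominated by frequent words like "the", which is one reason real models apply weighting schemes before comparing vectors.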

In summary, we have a variety of ways to think of lexical semantic information, depending on exactly which findings we wish to account for. Do we seek to explain developmental or adult data? To what extent does neural plausibility matter? Should we let facts about the brain interfere with our modelling of cognition? Not necessarily, if one adheres to "multiple realizability"! What we can say, however, is that the connectionist approach, despite being a very versatile one, still constitutes the privileged way to describe lexical semantics. The importance of using high-dimensional spaces is also emerging. Finally, the literature is still highly polarized along the developmental vs mature dimension, with little information exchanged between models in each realm.

In another post, I'll try to explain how a less schizophrenic modelling approach could exist, mediated by self-organising models.

09/04/2009

Gigantic!

..Well, that is, apart from the "insignificant bit of carbon" part at the end. I think this is a misconception. It reminds me of Stephen Hawking's "chemical scum" statement. I agree with David Deutsch that this is the product of a limited vision of the human condition!

03/04/2009

24/03/2009

Lexical semantics (2/4)

Let us work our way up to the next question.

Is it located at any given place in the brain?

Well, at first the answer seemed to be yes. Wernicke's area was associated with word meaning as early as 1874. Patients with lesions in this area are afflicted with a condition which leaves them unable to grasp the sense of words or to produce meaningful speech, though importantly they are essentially unimpaired in other cognitive functions. Wernicke's area is close to the auditory cortex, but the associated deficit is not limited to the oral modality and extends to written language comprehension.

But of course, as so often in cognitive science, things are a great deal more complicated. Researchers now agree that semantic retrieval involves, at the very least, a network of distant regions in the left frontal and temporal lobes, as suggested by the picture on the right (see this 2004 brain imaging study from Noppeney et al and this Nature Reviews Neuroscience review from Patterson et al; both feature authoritative collaborators).

Characterizing the neural correlates of semantics remains work in progress, and as a matter of fact entire research labs are committed to this pursuit. Let me point to one important lab at the University of Wisconsin, which should also get the credit for the artwork.

23/03/2009

Lexical semantics (1/4)

What we cognitive scientists usually mean by lexical semantics is the system responsible for storing the meaning of words. How is this system organised? How does it develop? How does it work? And is it located anywhere in particular in the brain? How can we know? Let's start with the last question.

How can we know?

Well, cognitive science uses three main classes of tools to probe the human mind: behavioral experiments, brain imaging and computational models. There is, of course, a continual conversation going on between the three. Behavioral experiments ask each subject in a large group to perform a simple task under controlled and reproducible laboratory conditions. The responses (usually motor responses) are then analysed statistically, so that significant effects can stand out and hypotheses can be supported or rejected.

A very common behavioral experiment in lexical semantics is semantic categorization, in which, upon presentation of a word on the screen, subjects are asked whether or not it belongs to a given category (is this bigger than a brick?). What we look at: reaction times and percentage of errors. As you can see, the idea is to plug our measuring apparatus into the simplest and, hopefully, most objective signal possible.
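
As an illustration of the statistical step, here is a Python sketch comparing mean reaction times between two conditions with Welch's t statistic, along with a percent-error measure. The reaction times and responses are made-up numbers, and Welch's t is just one common choice of test.

```python
import math
import statistics
from typing import Sequence


def error_rate(correct: Sequence[bool]) -> float:
    """Percentage of trials answered incorrectly."""
    return 100.0 * sum(not c for c in correct) / len(correct)


def welch_t(a: Sequence[float], b: Sequence[float]) -> float:
    """Welch's t statistic for a difference in mean reaction time (unequal variances allowed)."""
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    return (statistics.mean(a) - statistics.mean(b)) / se


# Hypothetical reaction times (ms) in two conditions of a categorization task
rt_cond_a = [520, 540, 560, 580]
rt_cond_b = [600, 620, 640, 660]
print(statistics.mean(rt_cond_a), statistics.mean(rt_cond_b))
print(round(welch_t(rt_cond_a, rt_cond_b), 2))  # -4.38
print(error_rate([True, True, False, True]))    # 25.0
```

A full analysis would turn t into a p-value, for instance with `scipy.stats.ttest_ind(a, b, equal_var=False)`, and would use many more trials and subjects.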

Brain imaging simply pushes this logic further: we plug our measuring apparatus directly into the brain (non-invasively, of course). The brain emits all kinds of signals strong enough to be picked up at the surface of our skulls. There are many ways to record them (EEG, MEG, PET), but fMRI is probably the most widely used brain imaging technique to date. All techniques have their strengths and weaknesses; for instance, they differ in their spatial and temporal resolution.

Once behavioral and brain imaging studies are available, computational models try to make sense of the data. Computational models can be more biologically or more psychologically oriented. A good balance in this respect seems to have been found early on by connectionism, which I for one define as the minimal concession to the hardware that one should acknowledge when modeling the software: using a large number of simple units connected to one another and which can be more or less active.
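
To make this definition concrete, here is a Python sketch of such a simple unit, assuming the common logistic activation function (one choice among several): it sums its weighted inputs and turns the result into a graded activation between 0 and 1. The weights are hypothetical.

```python
import math
from typing import List, Sequence


def unit_activation(inputs: Sequence[float], weights: Sequence[float], bias: float = 0.0) -> float:
    """One simple unit: weighted sum of incoming activity, squashed into (0, 1)."""
    net = sum(a * w for a, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-net))


def layer(inputs: Sequence[float], weight_rows: Sequence[Sequence[float]]) -> List[float]:
    """Many such units reading the same inputs, each through its own connection weights."""
    return [unit_activation(inputs, row) for row in weight_rows]


# Hypothetical weights: the first unit is excited by the input, the second inhibited
out = layer([1.0, 0.5], [[2.0, 1.0], [-2.0, -1.0]])
print(out)
```

Each unit ends up "more or less active" rather than simply on or off, which is the property the definition above relies on.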

The explanatory power of a computational model is, as usual, given by the number of facts it can explain relative to the number of hypotheses it makes. But explanatory power is not the whole story: one would also like a model to make predictions that could be further tested with behavioral or brain studies. We want our models to be falsifiable.

How can we know?

Well cognitive science uses three main classes of tools to probe the human mind: behavioral experiments, brain imagery and computational models. There is of course a continual conversation going on between the three. Behavioral experiments ask each subject in a large group to operate a simple task in controled and reproducible laboratory conditions. Responses (usually motor responses) are then treated statistically and in this way significant effects can stand out. Hypotheses are suported or disproved.

A most common behavioral experiment in lexical semantics would be semantic categorization, in which upon presentation of a word on the screen, subjects are being asked whether it belongs to one category (is this bigger than a brick?) or not. What we look at: reaction times and percent errors. You can see that the idea is to plug our measuring apparatus to the simplest and hopefully the most objective signal possible.

Brain imagery simply tries to push the logic forward: we plug our measuring apparatus directly to the brain (non-invasively of course). The brain emits all kinds of signals strong enough to be picked-up at the surface of our skulls. There are many ways to do that: EEG, or MEG, another is PET, but I guess fMRI would be the most used brain imaging technique to date. All techniques have their strengths and weaknesses, for instance they are more or less accurate as for the spatial or temporal qualities of the signal.

Once behavioral and brain imaging studies are available, computational models try to make sense of the data. Computational models can be more biologically or more psychologically oriented. A good balance in this respect seems to have been found early on by connectionism, which I for one define as the minimal concession to the hardware that one should make when modeling the software: a large number of simple units, connected to one another, each of which can be more or less active.
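
That definition translates almost directly into code. The sketch below represents a network as a list of activations between 0 and 1 plus a matrix of connection weights. The logistic activation function, the synchronous updating and the weight values are hypothetical choices of mine. Connectionist models use many other variants.

```python
import math


def logistic(x):
    """Squash a net input into an activation between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def update(activations, weights, biases):
    """One synchronous update: every unit takes the logistic of its net input.

    weights[i][j] is the strength of the connection from unit j to unit i.
    """
    n = len(activations)
    return [
        logistic(sum(weights[i][j] * activations[j] for j in range(n)) + biases[i])
        for i in range(n)
    ]


# Three units: unit 0 excites unit 1, unit 1 inhibits unit 2 (arbitrary values).
# Unit 0 has no incoming connections, so it relaxes to logistic(0) = 0.5.
weights = [
    [0.0, 0.0, 0.0],
    [3.0, 0.0, 0.0],
    [0.0, -3.0, 0.0],
]
biases = [0.0, 0.0, 0.0]
state = [1.0, 0.0, 0.0]
for step in range(3):
    state = update(state, weights, biases)
    print(step, [round(a, 3) for a in state])
```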

The explanatory power of a computational model is, as usual, given by the number of facts it can explain relative to the number of hypotheses it makes. But explanatory power is not the whole story: one would also like a model to make predictions that can be further tested with behavioral or brain studies -we want our models to be falsifiable.

18/03/2009

14/03/2009

This just in

Ouch. Mountazer Al-Zaïdi, the Iraqi journalist famous for his wonderful shoe-throwing at Bush, has been sentenced to three years in jail... (French article).

I hope he will eventually benefit from some kind of amnesty. The guy is huge in Iraq and elsewhere, and presumably pressure from the international media could help. In fact, we can help right now.

It takes a lot of courage to do what he did, and three years is quite a lot of time.

13/03/2009

Links of the day

Couchsurfing seems like a nice way to travel, since one is directly plugged into the lives of local people, and the network has now reached 10^6 members.

Although... we have friends in a similar network, globalfreeloader, and they have not always been ecstatic about it.

Perhaps there's an equivalent community in which one doesn't have to share one's flat? Well, sure enough there is -ahhh, how I love the 21st century!

12/03/2009

Working memory and IQ

Nice article in the Times today about brain training.

The authors quickly jump to working memory, and present recent experiments with subjects whose IQ scores increase with the amount of training they get in a dual n-back task. Another group of subjects, without training, did not improve their scores.

These results are interesting, but the authors correctly point out that the control condition (a group with no training at all) does not allow one to conclude that working memory is specifically involved.
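
For readers unfamiliar with it, here is a rough Python sketch of the logic of a dual n-back task. Two streams (say, square positions and spoken letters) run in parallel, and on each trial the subject must signal whether the current item matches the one presented n trials earlier, separately for each stream. The stimulus sequences, the example responses and the hits/false-alarms scoring are illustrative assumptions, not the protocol of the studies mentioned in the article.

```python
def nback_targets(stream, n):
    """For each trial t, True if stream[t] matches stream[t - n] (False for t < n)."""
    return [t >= n and stream[t] == stream[t - n] for t in range(len(stream))]


def score(targets, responses):
    """Count hits (target flagged) and false alarms (non-target flagged)."""
    pairs = list(zip(targets, responses))
    hits = sum(1 for is_target, pressed in pairs if is_target and pressed)
    false_alarms = sum(1 for is_target, pressed in pairs if pressed and not is_target)
    return hits, false_alarms


# Hypothetical 2-back session: both streams are presented simultaneously.
n = 2
positions = [3, 5, 3, 7, 3, 2, 8, 2]                # square positions on a 3x3 grid (0-8)
letters = ["C", "H", "K", "H", "C", "K", "C", "L"]  # spoken letters

position_targets = nback_targets(positions, n)
letter_targets = nback_targets(letters, n)

# Invented responses of one subject to the position stream.
position_presses = [False, False, True, False, False, False, True, True]
print(score(position_targets, position_presses))
```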

09/03/2009

Sampling performance

Kutiman has done a really spectacular sampling job, based exclusively on YouTube videos.

In addition to his technical mastery, he seems just as comfortable with funk, ragga, jungle, soul, etc. It is a very generous work. As for me, I especially like sample 3, at 4:36. (via Cédric)

On a totally different subject, there is also a very nice post over at "When in doubt, do", where we learn that the aforementioned Charles Darwin was a list maker, and, mind you, for not-so-trivial matters!

08/03/2009

06/03/2009

Growing maps

I'm currently taking a closer look at growing self-organising maps for a grant proposal. These are derived from the famous Kohonen map, a kind of neural network that establishes a correspondence between a high-dimensional input space and a lower-dimensional one (almost always two-dimensional, but not necessarily). Whereas SOMs work with a fixed number of nodes, growing SOMs add nodes over time. This makes them useful for, e.g., modeling language acquisition (especially since these are unsupervised networks).
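
Here is a toy Python sketch to fix ideas. It is not the model from the proposal. It uses the usual Kohonen update, in which each node i moves towards the input x by lr * h(i, b) * (x - w_i), where b is the best-matching unit and h is a Gaussian neighbourhood over positions in the map. It adds a growth rule chosen purely for illustration. To keep it short, the map is a 1-D chain rather than the usual 2-D grid, and the learning rate and neighbourhood width stay constant (classic SOMs usually shrink them over time). Once per epoch, a node is inserted between the unit with the largest accumulated quantization error and its worse neighbour, loosely in the spirit of the error-driven growth used in Fritzke's growing networks. The function name and all parameter values are hypothetical.

```python
import math
import random


def sq_dist(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def train_growing_chain(data, n_start=2, max_nodes=10, epochs=20,
                        lr=0.1, sigma=1.0, seed=0):
    """Toy growing SOM: a 1-D chain of nodes that gains one node per epoch."""
    if n_start < 2:
        raise ValueError("n_start must be at least 2")
    rng = random.Random(seed)
    dim = len(data[0])
    nodes = [[rng.random() for _ in range(dim)] for _ in range(n_start)]
    for _ in range(epochs):
        errors = [0.0] * len(nodes)
        for x in rng.sample(data, len(data)):  # shuffled pass over the data
            # Best-matching unit: the node closest to the input.
            b = min(range(len(nodes)), key=lambda i: sq_dist(nodes[i], x))
            errors[b] += sq_dist(nodes[b], x)
            # Kohonen update: neighbours along the chain move towards x too.
            for i, w in enumerate(nodes):
                h = math.exp(-((i - b) ** 2) / (2 * sigma ** 2))
                for k in range(dim):
                    w[k] += lr * h * (x[k] - w[k])
        if len(nodes) < max_nodes:
            # Grow: insert a node between the worst unit and its worse neighbour.
            q = max(range(len(nodes)), key=lambda i: errors[i])
            nbrs = [j for j in (q - 1, q + 1) if 0 <= j < len(nodes)]
            f = max(nbrs, key=lambda j: errors[j])
            nodes.insert(max(q, f), [(a + c) / 2 for a, c in zip(nodes[q], nodes[f])])
    return nodes


# Toy data: 51 points along a parabola in the unit square.
data = [(t / 50, (t / 50) ** 2) for t in range(51)]
nodes = train_growing_chain(data)
print(len(nodes), "nodes")
```

Because each node is only ever moved part of the way towards an input, and new nodes are averages of existing ones, all weights stay inside the unit square.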

05/03/2009

Wonderful memes

We had a splendid dinner last night at the Butte-aux-Cailles with some family.

The occasion was my recent PhD defense, but we also discussed tons of other subjects, especially history and art. Knowledge flows from my grand-aunt and grand-uncle like water from a spring: crystalline, distilled and enriched by years of travel. I should add that both have very impressive careers behind them, at the Louvre for my grand-aunt and as a teacher and civil servant at the Ministry of Industry for my grand-uncle.

What may have triggered it all, this time, was my not-so-innocent bit of trivia that Rome, Istanbul and Paris are each said to have seven hills. After a quick count we were only able to locate 6 hills in Paris, 2 in Istanbul and 5 in Rome -well, it seems Paris really has only six.

Then all bets were off, and in no time we were discussing travels, hearing about unexpected adventures, being told about grandiose pieces of architecture and, to my delight, simply submerged in Roman history. The emperors Trajan, Hadrian and Marcus Aurelius were of particular interest, and we wondered whether or not Hadrian was right to let one piece of the empire go -soon enough we agreed he was.

03/03/2009

Swimming

There is something about swimming... that I simply don't experience with any other sport.

Working out at the gym is pretty much about what the name indicates: work. No real fun involved -except perhaps sometimes when practiced in tandem. Running is not much fun either, but at least one can enjoy the landscape, and after a while a kind of meditation can take place -like a dialogue between mental and physical abilities- which is really great. But only when swimming do I entirely switch off from a day of work.

This is because suddenly, and for an hour or so, I no longer operate in the same element. Sounds have this strange distortion caused by their propagation through the liquid medium, light is refracted, water exerts more pressure on my skin, but my weight is reduced and my balance modified -all of which conspires to reset the cognitive machinery. I always come out of the pool with an open mind and clear thoughts.

Ok, off to the swimming pool! By the way, I'd like to try an endless one some day.

02/03/2009

Paris!

01/03/2009

What's catastrophic forgetting?

I should describe what catastrophic forgetting means in the context of cognitive science. Well, you'd think there would be a Wikipedia entry for this, but there isn't -I should probably go and write it myself. Meanwhile, here's a nice introductory paper.

Anyway, catastrophic forgetting is the phenomenon by which some neural networks completely forget past memories when exposed to a set of new ones.

Naturally, this has been of some concern to proponents of such systems, which after all aim at simulating human memory functions. Humans do not, under normal circumstances, show this behavior. However, it has been suggested, and pretty convincingly argued, that interleaved learning could circumvent the issue, at least in feed-forward networks trained with the backpropagation rule.
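
Here is a toy illustration of both points in Python. It uses a single-layer linear network trained with the delta rule (which is what backpropagation reduces to when there are no hidden layers), far simpler than the multi-layer networks discussed in the literature. Two small tasks, A and B, share input features, and their patterns and targets are made up for the example. Training on A and then on B alone degrades performance on A, whereas interleaving the two tasks learns both. A network this small only shows the interference behind the phenomenon, not forgetting as complete as in the real thing.

```python
def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train(w, patterns, epochs=2000, lr=0.1):
    """Online delta rule (LMS): one weight update per pattern presentation."""
    w = list(w)
    for _ in range(epochs):
        for x, target in patterns:
            err = target - predict(w, x)
            for k in range(len(w)):
                w[k] += lr * err * x[k]
    return w


def mse(w, patterns):
    return sum((target - predict(w, x)) ** 2 for x, target in patterns) / len(patterns)


# Made-up tasks whose input patterns overlap (shared active features).
task_a = [([1, 1, 0, 0], 1.0), ([0, 1, 1, 0], 0.0)]
task_b = [([1, 0, 1, 0], 0.0), ([0, 0, 1, 1], 1.0)]

w0 = [0.0] * 4
w_seq = train(train(w0, task_a), task_b)  # A first, then B only
w_int = train(w0, task_a + task_b)        # A and B interleaved

print(f"sequential : A={mse(w_seq, task_a):.3f}  B={mse(w_seq, task_b):.3f}")
print(f"interleaved: A={mse(w_int, task_a):.3f}  B={mse(w_int, task_b):.3f}")
```

Working it out by hand, sequential training should leave a mean squared error of 5/18 (about 0.28) on task A while B is learned perfectly. With interleaving, both errors go to (numerically) zero, because the four input patterns are linearly independent and a single weight vector can fit all of them.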

There is also a more catastrophic sense in which the notion has been used: to describe the complete loss of all memories, past or recent, that occurs in, e.g., the Hopfield network when it is exposed to too many patterns (interested in reading a recent thesis on the subject?). This is a subject I find utterly fascinating, with ramifications for such questions as the function of dream sleep and palimpsest learning. More on that later.
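
Here is a small Python sketch of that overload effect, assuming the standard Hopfield setup: random +/-1 patterns stored with the Hebbian rule, no self-connections, and the sign update rule. As a simple proxy for recall, it only checks whether each stored pattern is an exact fixed point of the dynamics. This is a stricter criterion than recall with a few errors. Network size, pattern counts and seed are arbitrary choices.

```python
import random


def hebbian_weights(patterns, n):
    """W_ij = (1/n) * sum over patterns of xi_i * xi_j, with no self-connections."""
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            s = sum(p[i] * p[j] for p in patterns) / n
            w[i][j] = w[j][i] = s
    return w


def is_fixed_point(w, p):
    """True if no unit would flip under the sign update rule h_i = sum_j W_ij p_j."""
    n = len(p)
    for i in range(n):
        h = sum(w[i][j] * p[j] for j in range(n))
        if h * p[i] <= 0:  # ties (h == 0) count as unstable here
            return False
    return True


def fraction_stable(n_units, n_patterns, seed=0):
    """Store random +/-1 patterns, then report the fraction that remain fixed points."""
    rng = random.Random(seed)
    patterns = [[rng.choice((-1, 1)) for _ in range(n_units)] for _ in range(n_patterns)]
    w = hebbian_weights(patterns, n_units)
    return sum(is_fixed_point(w, p) for p in patterns) / n_patterns


for n_patterns in (5, 10, 20, 40):
    print(f"{n_patterns:2d} patterns in 100 units: "
          f"{fraction_stable(100, n_patterns):.0%} of stored patterns stable")
```

With 100 units, a handful of patterns should all be stable. At 40 patterns, well beyond the classic capacity estimate of roughly 0.14 patterns per unit, hardly any of them, old or new, should remain a fixed point.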

28/02/2009

Incipit

Birth of this blog!

Catastrophic forgetting is intended as a window into the life of a cognitive scientist, featuring miscellaneous and more or less personal thoughts, as well as regular reports on what's going on in computational cognitive modeling.
